Topology of the network

Latest revision as of 00:44, 4 June 2008

Context And Goals

This task addresses problems inherent to GISS 2.0, which is based on a centralized topology with a single access point, the master server (giss.tv). Because every source could only emit to that central server, whose upload bandwidth is limited, the system sometimes became saturated.

Work achieved

Upgrade of servers

Before changing the topology, we upgraded our servers to make the server software consistent across machines. The main server was also migrated to a more powerful machine (courtesy of hangar.org). After this cleanup, we reached the following configuration:

| Name | Location | Icecast Version | Mode |
|---|---|---|---|
| giss.tv | Paris, France | Icecast 2.3-kh34a | Primary |
| stream.r23.cc | Bergen, Norway | Icecast 2.3-kh34a | Relay |
| thescreen.tv | Vienna, Austria | Icecast 2.3-kh33 | Relay |
| whitmanlocalreport.net | New York, USA | Icecast 2.3.1 | Relay |
| live1.radiovague.com | San Antonio, USA | Icecast 2.3-kh33 | Relay |
| live2.radiovague.com | San Antonio, USA | Icecast 2.3-kh20 | Relay |
| stream.goto10.org | Amsterdam, Netherlands | Icecast 2.3-kh33 | Relay |
| labbs.net | Malmö, Sweden | Icecast 2.3-kh20 | Relay |
| stream.horitzo.tv | Berlin, Germany | Icecast 2.3-kh20 | Standalone |

As the table shows, we had one primary server and 7 relays. The remaining server, stream.horitzo.tv, is standalone, but all its activity also appears on the map.

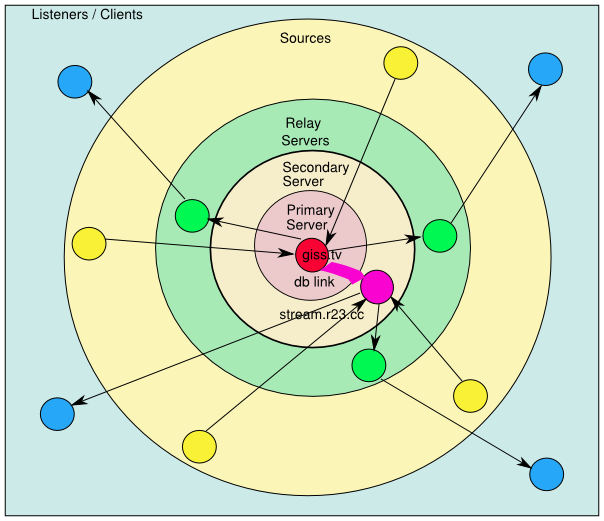

Setting up a secondary server

Once a clean list of servers had been established, we set up one of them as a secondary server by creating a database link between the primary and the secondary server. All mountpoints created on the primary server (giss.tv) are therefore also known to the secondary server, so a source can emit to either one.

The database link is a scheduled task that updates the mountpoints on both the primary and the secondary server.
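The scheduled task is not shown on this page, so the following is only a minimal sketch of what one run of such a two-way mountpoint update might look like. The `mountpoints` table, its columns, and the use of SQLite are assumptions made for illustration; the real GISS databases and schema may differ.

```python
import sqlite3

def make_db(mounts):
    """Create an in-memory database with a hypothetical `mountpoints` table."""
    db = sqlite3.connect(":memory:")
    db.execute("CREATE TABLE mountpoints (name TEXT PRIMARY KEY, owner TEXT)")
    db.executemany("INSERT INTO mountpoints VALUES (?, ?)", mounts)
    db.commit()
    return db

def copy_missing(src, dst):
    """Copy every mountpoint that exists in `src` but not yet in `dst`."""
    rows = src.execute("SELECT name, owner FROM mountpoints").fetchall()
    # INSERT OR IGNORE skips names that `dst` already knows.
    dst.executemany("INSERT OR IGNORE INTO mountpoints VALUES (?, ?)", rows)
    dst.commit()

def sync(primary, secondary):
    """One run of the scheduled task: propagate mountpoints in both directions."""
    copy_missing(primary, secondary)
    copy_missing(secondary, primary)

def mount_names(db):
    """Return the sorted list of mountpoint names known to a server."""
    return sorted(row[0] for row in db.execute("SELECT name FROM mountpoints"))

# Hypothetical mountpoints; each server starts with one the other lacks.
primary = make_db([("/radio-a.ogg", "alice"), ("/radio-b.ogg", "bob")])
secondary = make_db([("/radio-b.ogg", "bob"), ("/radio-c.ogg", "carol")])
sync(primary, secondary)
print(mount_names(primary))
print(mount_names(secondary))
```

After one run both servers know the same set of mountpoints, which is what lets a source emit to either of them. This sketch only adds missing mountpoints; deletions and conflicting edits would need extra handling.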

Technical Details

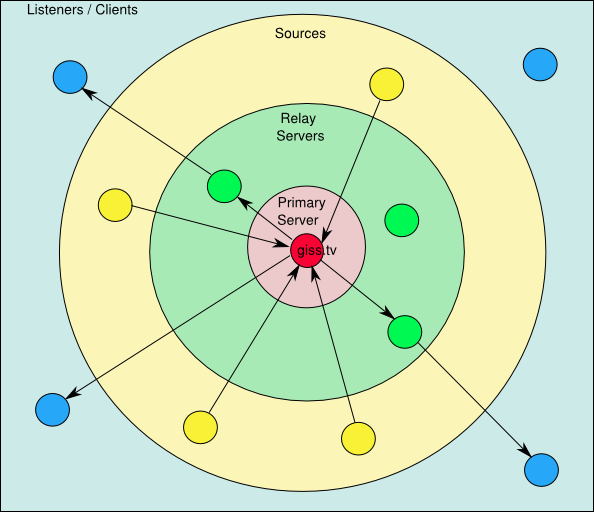

Topology of GISS 2.0

This configuration is based on a central server that receives all incoming streams and relays them to other servers "on demand". A client/listener is served by the main server, or by a relay once the master server has reached the maximum number of listeners on a mountpoint. In the current configuration, the maximum number of listeners per stream is set to 10 (for potentially 300 streams).
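The overflow rule described above can be sketched as follows. This is an illustration only, not the actual dispatch code: the function name, the `listeners` bookkeeping, and the assumption that relays apply the same per-mountpoint limit as the master are all hypothetical.

```python
MAX_LISTENERS_PER_MOUNT = 10  # per-stream limit quoted in the text

def pick_server(mount, listeners, relays, master="giss.tv",
                limit=MAX_LISTENERS_PER_MOUNT):
    """Return the server that should serve a new listener of `mount`.

    `listeners` maps (server, mount) to the current listener count.
    The master is tried first; relays are used only once it is full.
    Assumption: relays apply the same limit as the master.
    """
    for server in [master, *relays]:
        if listeners.get((server, mount), 0) < limit:
            return server
    return None  # every server is full for this mountpoint

relays = ["thescreen.tv", "stream.goto10.org"]
listeners = {("giss.tv", "/radio-a.ogg"): 10}  # master is full for this mount

print(pick_server("/radio-a.ogg", listeners, relays))  # overflows to a relay
print(pick_server("/radio-b.ogg", listeners, relays))  # master still has room
```

With a limit of 10 listeners per stream and up to 300 streams, the master serves at most 10 × 300 = 3000 listeners directly; everything beyond that goes to the relays.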

New Topology of GISS 3.0

After the reconfiguration, each source has the option to emit to the secondary server, and a stream can be served by the primary, the secondary or one of the relay servers.

Current server configuration

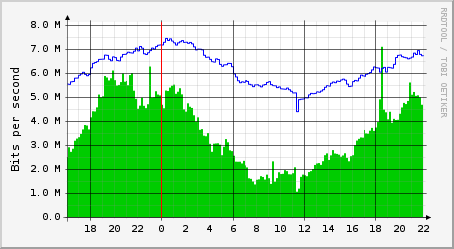

We only changed the mode of one server so that it acts as a secondary server. In principle we could have more secondary servers, but for now one seems sufficient for the upload traffic we get, especially since the bandwidth of the main server has also been increased. Here is the graph of daily traffic on the master server:

In the new configuration, each server has the following role:

| Name | Location | Icecast Version | Mode |
|---|---|---|---|
| giss.tv | Paris, France | Icecast 2.3-kh34a | Primary |
| stream.r23.cc | Bergen, Norway | Icecast 2.3-kh34a | Secondary |
| thescreen.tv | Vienna, Austria | Icecast 2.3-kh33 | Relay |
| whitmanlocalreport.net | New York, USA | Icecast 2.3.1 | Relay |
| live1.radiovague.com | San Antonio, USA | Icecast 2.3-kh33 | Relay |
| live2.radiovague.com | San Antonio, USA | Icecast 2.3-kh20 | Relay |
| stream.goto10.org | Amsterdam, Netherlands | Icecast 2.3-kh33 | Relay |
| labbs.net | Malmö, Sweden | Icecast 2.3-kh20 | Relay |
| stream.horitzo.tv | Berlin, Germany | Icecast 2.3-kh20 | Standalone |
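The role table above can also be written as data, which makes the difference between the servers a source may emit to and the servers a listener may be served by explicit. This is a sketch for illustration: the server names and modes come from the table, while the two mode sets and the helper function are assumptions derived from the description of the GISS 3.0 topology.

```python
# Server modes copied from the role table.
SERVERS = {
    "giss.tv": "Primary",
    "stream.r23.cc": "Secondary",
    "thescreen.tv": "Relay",
    "whitmanlocalreport.net": "Relay",
    "live1.radiovague.com": "Relay",
    "live2.radiovague.com": "Relay",
    "stream.goto10.org": "Relay",
    "labbs.net": "Relay",
    "stream.horitzo.tv": "Standalone",
}

# Assumption: sources may emit to the primary or the secondary server only.
INGEST_MODES = {"Primary", "Secondary"}
# Per the text: a stream can be served by the primary, the secondary, or a relay.
SERVING_MODES = {"Primary", "Secondary", "Relay"}

def servers_with_mode(modes):
    """Return the names of all servers whose mode is in `modes`, in table order."""
    return [name for name, mode in SERVERS.items() if mode in modes]

print(servers_with_mode(INGEST_MODES))
print(len(servers_with_mode(SERVING_MODES)))
```

Adding another secondary server then amounts to changing one entry from "Relay" to "Secondary", which mirrors the single mode change described above.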

Conclusion

This task has been completed, and the result seems to be a very scalable solution. If traffic were to increase abruptly (after the workshop in India?), it would be possible to set up more secondary servers, and there is no longer a bottleneck in the system.